What is network latency? Causes, tests & fixes

Network latency creates gaps in performance that slow down workflows and disrupt enterprise connectivity. Teams need to identify where delays start, how often they happen, and what fixes actually work across real environments.

What is network latency?

Network latency is the time it takes for data to travel from one point to another across a network. It is measured in milliseconds (ms) and is usually reported as round-trip time (RTT): the delay for a request to reach the receiver and for the response to come back.

Low latency means fast response. High latency leads to delays in apps, video calls, and file loads.

Key features of network latency

Latency shows up as a delay measured in milliseconds. How much those milliseconds matter depends on the application.

A few extra milliseconds rarely matter when loading a website, but they can disrupt a video call or game session. Real-time applications need consistently low latency.

Latency doesn’t come from a single hop. It builds up across routers, ISPs, and long physical distances. The longer the route, the greater the delay.

Many confuse latency with bandwidth, but they’re different. Bandwidth is how much data your network can carry. Latency is how long that data takes to move.

Several things affect latency:

- The network protocol (UDP skips TCP’s connection handshake and retransmissions, so it often adds less delay)

- The amount of congestion

- Hardware bottlenecks in switches, modems, or firewalls

Even small issues can compound across the network.

How does network latency work?

Network latency is the total delay that accumulates across a request-and-response cycle. Every time you click a link or send a message, your device starts the cycle by sending a packet to a remote server.

Packets pass through network devices such as switches, routers, and ISPs. A server receives the request, processes it, and generates a response. Your device receives the response as it returns through the network.

Here’s how the cycle works during a typical browser request:

- You click on a product page

- Your device sends a packet to the server

- The packet travels through switches, routers, and ISPs

- The server receives the request and generates a response

- The response returns through the same or a similar path

- Your browser loads the page

Network latency is the total round-trip delay, measured in milliseconds. High latency makes the process feel slow. Low latency keeps interactions fast.

Network latency vs. bandwidth vs. throughput

Network latency, bandwidth, and throughput are three separate metrics. Many people confuse them, but each one measures a different part of network performance.

The chart below shows the differences between what each of the metrics measures:

High bandwidth does not guarantee good throughput if latency or packet loss slows data delivery. Low latency does not always mean good performance. Network congestion or bandwidth limits can still cause delays.
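One way to see how latency limits throughput: a sender that uses a fixed window can keep at most one window of data in flight per round trip, so a single connection cannot exceed the window size divided by the RTT, no matter how much bandwidth the link has. The sketch below works through that arithmetic. The function name and the 64 KiB window are illustrative assumptions, not values from any specific network.

```python
def max_window_throughput_mbps(window_bytes: int, rtt_ms: float) -> float:
    """Upper bound on one connection's throughput (Mbit/s) for a fixed window."""
    if rtt_ms <= 0:
        raise ValueError("rtt_ms must be positive")
    bits_per_round_trip = window_bytes * 8
    round_trips_per_second = 1000.0 / rtt_ms
    return bits_per_round_trip * round_trips_per_second / 1_000_000


if __name__ == "__main__":
    window = 64 * 1024  # assumed example window of 64 KiB
    for rtt in (10, 50, 150):
        bound = max_window_throughput_mbps(window, rtt)
        print(f"RTT {rtt:>3} ms: at most {bound:.1f} Mbit/s")
```

With the same window, raising the RTT from 10 ms to 150 ms cuts the ceiling by a factor of 15, which is why a high-bandwidth link can still feel slow over a long path.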

Each metric matters, but low latency makes the biggest difference for real-time services. Video calls, remote work tools, and cloud apps all need quick back-and-forth responses.

For more on how these pieces connect, explore speed vs. bandwidth and network throughput vs. latency.

What causes high latency?

Technical and physical delays cause high latency by slowing down data as it travels across a network. Some delays are easy to fix. Others depend on network design, location, or hardware quality.

Geographic distance between devices

Longer distances increase latency. Data takes more time to travel when the source and destination are far apart. Signals must cross undersea cables, satellites, or land-based infrastructure. Light in optical fiber covers roughly 200 km per millisecond, so every added mile introduces a little more delay that no hardware upgrade can remove.

Global networks often experience high latency. Businesses can reduce this delay by using a content delivery network (CDN) or placing edge servers closer to users.
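To get a feel for how much delay distance alone contributes, here is a rough sketch of the minimum round-trip time over fiber. It assumes a signal speed of about 200,000 km/s (roughly two-thirds of the speed of light in a vacuum) and a perfectly straight cable path. Real routes are longer and add processing time at every device, so treat the result as a floor, not a prediction.

```python
SPEED_IN_FIBER_KM_PER_S = 200_000  # approximate; assumed for this sketch


def min_rtt_ms(distance_km: float) -> float:
    """Lowest possible round-trip time over fiber for a given one-way distance."""
    if distance_km < 0:
        raise ValueError("distance_km must not be negative")
    one_way_seconds = distance_km / SPEED_IN_FIBER_KM_PER_S
    return 2 * one_way_seconds * 1000


if __name__ == "__main__":
    # Illustrative distances, not measurements of any real route.
    for km in (100, 1_000, 10_000):
        print(f"{km:>6} km: at least {min_rtt_ms(km):.0f} ms round trip")
```

A user 10,000 km from a server cannot see less than about 100 ms of round-trip delay, which is the case a CDN or edge server addresses by shortening the distance.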

Network congestion during peak usage

Congestion increases latency by overloading network devices. High-traffic periods crowd routers and switches, which creates delays. Busy networks slow down packet processing. Routers fill their buffers, delay traffic, or drop packets when demand exceeds capacity.

Large downloads and live events often cause spikes in latency. Enterprise backups and scheduled syncs also contribute to network slowdowns.

High bandwidth does not prevent latency if congestion management is weak. Networks need traffic shaping and quality of service (QoS) to stay responsive.
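The buffer effect can be estimated with simple arithmetic: a packet that arrives behind a queue has to wait until everything ahead of it has been transmitted. The sketch below computes that wait. The function name and the example numbers are assumptions chosen for illustration.

```python
def queuing_delay_ms(queued_bytes: int, link_mbps: float) -> float:
    """Time a new packet waits behind data already queued on a link."""
    if link_mbps <= 0:
        raise ValueError("link_mbps must be positive")
    bits_per_millisecond = link_mbps * 1000  # 1 Mbit/s equals 1,000 bits per ms
    return queued_bytes * 8 / bits_per_millisecond


if __name__ == "__main__":
    # Assumed example: 1 MB already buffered on a 100 Mbit/s uplink.
    print(f"{queuing_delay_ms(1_000_000, 100):.0f} ms of added delay")
```

In this example, a single megabyte of backlog adds 80 ms to every packet behind it. QoS helps because it lets latency-sensitive packets skip that queue.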

Outdated or overloaded hardware

Old or misconfigured hardware increases latency. Slow switches, aging routers, and legacy firewalls take longer to process traffic.

Underpowered devices struggle to inspect and forward packets efficiently. Hardware bottlenecks and firmware bugs can also create jitter and spikes in delay.

Many business networks still rely on outdated equipment. Older gear cannot handle modern traffic loads or application demands.

Upgrading core hardware helps reduce latency and stabilize performance.

Inefficient routing paths

Routing paths directly affect network latency. Traffic slows down when it takes longer or less direct routes.

ISPs use BGP to select paths based on cost or policy. These decisions often send data through indirect or congested routes.

Each hop adds delay. Multi-hop paths increase latency with every additional router.

Routing loops and policy errors multiply delays. Misconfigured networks can double or triple the travel time for packets.

Networks without route visibility cannot detect these problems. Poor monitoring leaves inefficient paths unchecked.

Firewalls and proxies

Firewalls and proxies increase latency by inspecting or rerouting traffic. Each security step adds processing time before data reaches its destination. Firewalls scan packet headers and apply filtering rules. Some firewalls also proxy the entire connection, which adds more delay.

Inline threat detection systems introduce noticeable slowdowns. Proxy chains and legacy VPNs often add tens of milliseconds to each request.

Latency gets worse when these tools sit between branch offices and cloud services. Traffic passes through more checkpoints before reaching its target.

Protocol overhead and handshake delays

Protocols add latency when they require multiple setup steps. TCP starts every session with a three-way handshake before sending data.

Encrypted connections add more overhead. A full TLS handshake (TLS is the successor to SSL) adds one extra round trip in TLS 1.3 and two in TLS 1.2 before application data can flow.

Applications like HTTPS, VoIP, and streaming often use stacked protocols. Each added layer increases total latency.

Unoptimized protocol stacks slow down response times even on high-bandwidth networks. More layers mean more waiting.
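The cost of these setup steps scales with round-trip time. As a rough sketch, the time before the first byte of a response arrives on a new connection is about one round trip for the TCP handshake, plus the TLS handshake round trips, plus one round trip for the request and response. The model below assumes full handshakes with no session resumption and ignores DNS lookups and server processing time.

```python
# Round trips added by a full handshake, without session resumption.
TLS_HANDSHAKE_ROUND_TRIPS = {"none": 0, "tls1.3": 1, "tls1.2": 2}


def time_to_first_byte_ms(rtt_ms: float, tls: str = "tls1.3") -> float:
    """Estimate the delay before the first response byte on a new connection."""
    tcp_handshake = 1      # SYN, SYN-ACK, then data can follow the final ACK
    request_response = 1   # the request itself and the start of the reply
    round_trips = tcp_handshake + TLS_HANDSHAKE_ROUND_TRIPS[tls] + request_response
    return round_trips * rtt_ms


if __name__ == "__main__":
    for version in ("none", "tls1.3", "tls1.2"):
        print(f"{version:>6}: {time_to_first_byte_ms(50, version):.0f} ms at 50 ms RTT")
```

At a 50 ms RTT, the estimate is 100 ms without TLS, 150 ms with TLS 1.3, and 200 ms with TLS 1.2. Reusing connections avoids paying the setup cost on every request.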

Ways to measure network latency

A network latency test checks how long it takes for data to travel between devices. Most tests report round-trip time, and the common tools below cover everything from a quick check to detailed packet analysis.

Tools to run latency tests

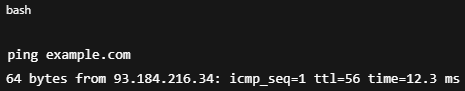

Ping sends a small packet to a target server and measures the round-trip time. Most operating systems include it by default.

Traceroute maps each hop between your device and the destination. You can see where latency increases along the path.
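Once you have per-hop round-trip times from a traceroute, a small helper can point to the hop where delay jumps the most. The sketch below assumes you have already copied the RTT for each hop into a list. The sample values are made up for illustration. Per-hop numbers can be noisy because some routers answer probes slowly, so use the result as a hint about where to look.

```python
def largest_hop_increase(hop_rtts_ms: list[float]) -> tuple[int, float]:
    """Return (hop number, increase in ms) for the biggest rise in RTT."""
    if not hop_rtts_ms:
        raise ValueError("hop_rtts_ms must contain at least one value")
    previous = 0.0
    worst_hop, worst_increase = 1, hop_rtts_ms[0]
    for hop_number, rtt in enumerate(hop_rtts_ms, start=1):
        increase = rtt - previous
        if increase > worst_increase:
            worst_hop, worst_increase = hop_number, increase
        previous = rtt
    return worst_hop, worst_increase


if __name__ == "__main__":
    sample = [1.2, 3.5, 4.1, 38.9, 40.2]  # hypothetical RTT per hop, in ms
    hop, increase = largest_hop_increase(sample)
    print(f"Largest jump at hop {hop}: +{increase:.1f} ms")
```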

Speedtest.net and Fast.com report latency as part of their speed tests. Both are useful for quick checks on home or branch networks.

Wireshark captures raw packet data and shows timestamps for each transfer. Network admins use it to analyze delays in detail.

ThousandEyes and similar platforms measure latency across distributed systems. Enterprise teams use these tools to monitor external paths. Separate dashboards help track internal latency as well.

Meter includes latency insights directly in the network dashboard. The platform displays round-trip time by site and flags abnormal delays. Teams use that data to find and fix performance issues quickly.

Round-trip time (RTT) in milliseconds is the standard way to measure latency. Lower numbers mean faster response.
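If you want a scriptable check, timing a TCP connection is one rough way to approximate RTT, since the connect call returns after about one round trip. The sketch below uses Python's standard `socket` module. The function name and default port are assumptions, and the result includes DNS lookup time when you pass a hostname instead of an IP address.

```python
import socket
import time


def tcp_connect_rtt_ms(host: str, port: int = 443, timeout: float = 2.0) -> float:
    """Approximate RTT by timing how long a TCP connection takes to open."""
    start = time.perf_counter()
    # create_connection returns once the TCP handshake has completed.
    with socket.create_connection((host, port), timeout=timeout):
        elapsed_seconds = time.perf_counter() - start
    return elapsed_seconds * 1000
```

Call it several times against a host you operate and compare the results. A single sample can be skewed by a momentary spike.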

Use these ranges as a reference:

- Low latency: under 50 ms

- Moderate latency: 50–150 ms

- High latency: over 150 ms

For example, a ping result of 12.3 ms indicates excellent latency. The connection responds quickly with minimal delay.
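The reference ranges above can be written as a small helper for scripts or reports. This is a minimal sketch. The function name and labels are assumptions, and the thresholds are the ones listed in this article.

```python
def classify_latency(rtt_ms: float) -> str:
    """Label a round-trip time using the ranges from this article."""
    if rtt_ms < 0:
        raise ValueError("rtt_ms must not be negative")
    if rtt_ms < 50:
        return "low"
    if rtt_ms <= 150:
        return "moderate"
    return "high"


if __name__ == "__main__":
    for sample in (12.3, 80, 220):
        print(f"{sample} ms: {classify_latency(sample)}")
```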

What’s a good latency speed?

A good latency speed stays under 50 milliseconds for most tasks. Latency under 50 milliseconds supports fast-loading web apps and clear video calls.

Real-time services work best with latency under 20 milliseconds. Voice calls, gaming, and live streaming need fast responses to stay smooth.

Delays over 150 milliseconds create noticeable lag and buffering. Users feel the difference during interactive sessions.

Aim for these latency times depending on your use case:

Teams should monitor latency regularly to catch issues early. Lifecycle management helps track latency during site changes and equipment upgrades.

How to improve network latency: 7 tips

You can improve latency by removing delays in how data moves across the network. Most fixes target hardware, routing, or protocol choices. Start with what you can control, then expand to infrastructure and service providers.

1. Use wired connections instead of Wi-Fi

Wired connections reduce latency by eliminating interference. Wi-Fi introduces retry cycles and signal drops. Ethernet provides more consistent delivery.

2. Upgrade routers, switches, and modems

Modern hardware processes packets faster. Old devices slow down traffic and increase delays. Upgrading reduces bottlenecks and keeps latency low.

3. Enable Quality of Service (QoS) settings

QoS settings prioritize latency-sensitive traffic. Real-time applications like voice and video need consistent speed. Routers with QoS reduce lag by managing traffic flow.

4. Choose low-latency ISPs or add CDN support

ISPs with optimized routing paths reduce travel time for data. CDNs place content closer to users. Both options help cut round-trip delays.

5. Optimize DNS settings and routing paths

Fast DNS resolvers speed up lookups. Shorter BGP paths reduce unnecessary hops. Together, they lower the total round-trip time.

6. Use edge computing to shorten data travel

Edge servers process requests near the user. Shorter distances mean faster responses. Businesses with remote users benefit the most.

7. Reduce protocol overhead

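Lightweight protocols cut handshake delays. Use UDP instead of TCP when the application can tolerate occasional packet loss. Avoid redundant encryption layers, such as a VPN tunnel wrapped around traffic that is already encrypted, when speed matters and the extra layer adds no protection.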

Track latency as part of your ongoing network management. Long-term improvements depend on visibility. A strong network management plan helps teams measure and fix delays over time.

Common latency issues and troubleshooting

Troubleshooting network latency starts with recognizing the symptoms.

Most latency issues come from avoidable delays in routing, hardware, or configuration. You can solve them by pinpointing the root cause and applying the right fix.

Lag in video calls

High latency or packet loss disrupts real-time communication. Video and audio may freeze or drop.

Switch to a wired Ethernet connection to improve stability. Enable QoS settings on your router to prioritize video traffic.

High ping in online games

Poor ISP routing or peak-hour congestion increases latency. Players experience input delay or rubberbanding.

Choose a low-latency ISP or try a gaming VPN that offers better routing paths.

Buffering during streaming

Slow DNS resolution or a CDN miss delays video playback. The player struggles to load content quickly.

Use a faster DNS provider and check your router’s configuration. Some routers may throttle streaming traffic.

Packet loss or jitter

Faulty cables or overloaded network devices drop packets. Jitter causes inconsistent delays between packets, which disrupts performance.

Replace damaged cables and eliminate sources of interference. Restart or upgrade underperforming network hardware.
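To put numbers on these symptoms, you can compute jitter and packet loss from a series of ping results. The sketch below uses one simple definition of jitter, the average absolute change between consecutive RTT samples. Monitoring tools may use a different formula. The sample values are hypothetical.

```python
from statistics import mean


def jitter_ms(rtts_ms: list[float]) -> float:
    """Average absolute difference between consecutive RTT samples."""
    if len(rtts_ms) < 2:
        raise ValueError("need at least two RTT samples")
    differences = [abs(later - earlier) for earlier, later in zip(rtts_ms, rtts_ms[1:])]
    return mean(differences)


def packet_loss_percent(sent: int, received: int) -> float:
    """Share of probes that never got a reply, as a percentage."""
    if sent <= 0 or not 0 <= received <= sent:
        raise ValueError("expected 0 <= received <= sent and sent > 0")
    return 100 * (sent - received) / sent


if __name__ == "__main__":
    samples = [20, 22, 19, 45, 21]  # hypothetical RTTs in ms
    print(f"Jitter: {jitter_ms(samples):.2f} ms")
    print(f"Loss: {packet_loss_percent(100, 97):.1f}%")
```

In this sample the average RTT looks acceptable, but one 45 ms spike pushes jitter to 13.75 ms, which is the kind of inconsistency that disrupts calls.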

Should you monitor latency?

You should monitor latency to keep business-critical services fast and reliable. Applications like SaaS platforms, VoIP calls, and video streaming depend on low latency to perform well.

High latency creates visible problems before systems fail. Users notice lag, freezing, or delay even if the network stays online.

Ongoing monitoring helps teams catch issues early. You can troubleshoot problems before users report them or performance drops.

Latency monitoring is essential during network changes. Track metrics during office moves, site launches, hardware upgrades, or ISP transitions.
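One simple way to catch issues early is to compare each new measurement with a recent baseline. The sketch below flags a sample when it exceeds a multiple of the median of past samples. The function name, the factor of 2, and the sample data are assumptions for illustration, not how any particular monitoring product works.

```python
from statistics import median


def is_abnormal(sample_ms: float, history_ms: list[float], factor: float = 2.0) -> bool:
    """Flag a sample that is well above the median of recent measurements."""
    if not history_ms:
        return False  # no baseline yet, so nothing to compare against
    return sample_ms > factor * median(history_ms)


if __name__ == "__main__":
    recent = [20, 22, 21, 19, 23]  # hypothetical baseline RTTs in ms
    for sample in (30, 95):
        status = "abnormal" if is_abnormal(sample, recent) else "normal"
        print(f"{sample} ms: {status}")
```

The median is used as the baseline so that one earlier spike does not distort the comparison.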

Use network performance factors to compare latency against bandwidth and throughput.

Frequently asked questions

What causes high latency?

High latency happens when data slows down across the network. Common causes include long distances, network congestion, outdated hardware, inefficient routing, firewall delays, and protocol overhead.

How do I reduce latency?

You can reduce latency by improving how data moves through your network. Use wired connections, upgrade your hardware, enable QoS settings, integrate a CDN, and shorten routing paths to lower delay.

What’s the difference between latency and bandwidth?

Latency measures how long it takes data to travel, while bandwidth measures how much data you can send. A network can have high bandwidth and still suffer from poor latency if delays occur.

How does latency affect gaming?

Latency affects gaming by causing lag and delayed responses. High ping makes controls feel slow and disrupts real-time gameplay.

Can VPNs reduce or increase latency?

VPNs can increase latency by adding routing steps and encryption overhead. Some VPNs reduce latency if they route traffic more efficiently than your ISP.

What are common tools for measuring latency?

You can measure latency with tools like Ping, Traceroute, Speedtest, and Wireshark. Enterprise teams often use advanced monitoring tools like ThousandEyes for broader visibility.

What is the best network latency monitoring tool?

The best latency monitoring tool depends on your network size and goals. Use Ping or Speedtest for basic checks. Use ThousandEyes or similar platforms for large-scale, multi-site tracking.

How Meter solves your network latency issues

Network latency slows down business-critical tools and frustrates users. Most teams spend hours chasing the cause without full visibility or control. Meter removes that burden by designing and managing networks that keep latency low.

Key features of Meter’s enterprise networking solution include:

- Full integration: Meter-built access points, switches, security appliances, and power distribution units work together to create a cohesive, stress-free network management experience.

- Managed experience: Meter provides proactive user support and done-with-you network management to reduce the burden on in-house networking teams.

- Hassle-free installation: Simply provide an address and floor plan, and Meter’s team will plan, install, and maintain your network.

- Software: Use Meter’s purpose-built dashboard for deep visibility and granular control of your network, or create custom dashboards with a prompt using Meter Command.

- OpEx pricing: Instead of investing upfront in equipment, Meter charges a simple monthly subscription fee based on your square footage. When it’s time to upgrade your network, Meter provides complimentary new equipment and installation.

- Easy migration and expansion: As you grow, Meter will expand your network with new hardware or entirely relocate your network to a new location free of charge.

To learn more, schedule a demo with Meter.